프로젝트 목표(변환 1~3)

변환 1

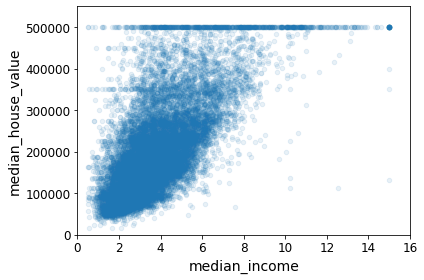

중간 소득과 중간 주택 가격 사이의 상관관계 그래프에서 확인할 수 있는 수평선에 위치한 데이터를 삭제한다.

우선 그래프를 확인해보면 median_house_value의 500,000 값이 집중적으로 존재한다.

housing = strat_train_set.copy()

# housing 값 초기화

housing.plot(kind="scatter", x="median_income", y="median_house_value",

alpha=0.1)

plt.axis([0, 16, 0, 550000])

save_fig("income_vs_house_value_scatterplot")

그림 저장: income_vs_house_value_scatterplot

한번 수치로도 확인해보자. 내림차순으로 정리를 하면 500,001 이라는 값이 있다는 것을 볼 수 있다.

50만 이상인 median_house_value 값을 전부 500,001로 처리한 것이라고 생각된다.

housing.loc[:, ["median_income","median_house_value"]]

| 2.7042 | 286600.0 |

| 6.4214 | 340600.0 |

| 2.8621 | 196900.0 |

| 1.8839 | 46300.0 |

| 3.0347 | 254500.0 |

| ... | ... |

| 4.9312 | 240200.0 |

| 2.0682 | 113000.0 |

| 3.2723 | 97800.0 |

| 4.0625 | 225900.0 |

| 3.5750 | 500001.0 |

16512 rows × 2 columns

housing_sorted_by_values = housing.sort_values(by=["median_income","median_house_value"] ,ascending=False)

housing_sorted_by_values.loc[:, ["median_income","median_house_value"]]

| 15.0001 | 500001.0 |

| 15.0001 | 500001.0 |

| 15.0001 | 500001.0 |

| 15.0001 | 500001.0 |

| 15.0001 | 500001.0 |

| ... | ... |

| 0.4999 | 100000.0 |

| 0.4999 | 90600.0 |

| 0.4999 | 82500.0 |

| 0.4999 | 73500.0 |

| 0.4999 | 56700.0 |

16512 rows × 2 columns

추가로 500,001과 동시에 수평선으로 보이는 것들도 체크해보자.

500,001, 450,000, 350,000, 275,000, 225,000, 187,500, 137,500, 112,500와 같은 수치가 두드러지는 수평선을 보이는 것 같다.

housing_counts = housing.value_counts("median_house_value")

housing_counts.iloc[:20]

median_house_value

500001.0 786

137500.0 102

162500.0 91

112500.0 80

187500.0 76

225000.0 70

350000.0 65

87500.0 59

150000.0 58

175000.0 52

100000.0 51

125000.0 49

275000.0 46

67500.0 43

200000.0 40

250000.0 37

118800.0 28

450000.0 28

75000.0 27

156300.0 27

dtype: int64

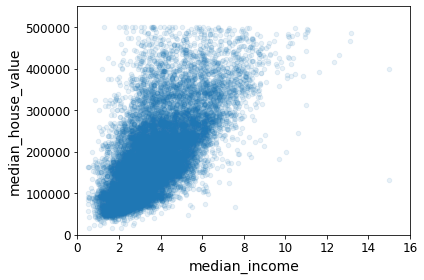

이제 이상치라고 생각하는 부분들을 제거해보자.

Outlier_line = [112500, 137500, 187500, 225000, 275000, 350000, 450000, 500001]

for i in Outlier_line :

housing = housing[housing.median_house_value != i]

확실히 전에 있던 그래프보다 보기 좋아졌다. 그리고 이번 학습에서 이상치 데이터를 가지고 학습하지 않을 것이다.

housing.plot(kind="scatter", x="median_income", y="median_house_value",

alpha=0.1)

plt.axis([0, 16, 0, 550000])

save_fig("income_vs_house_value_scatterplot")

그림 저장: income_vs_house_value_scatterplot

변환 2

회귀 모델 훈련에 사용되는 12개의 특성 중에 세 개는 기존 9개의 특성을 조합하여 생성하였다. 12개의 특성 중에 중간 주택 가격과의 상관계수의 절댓값이 0.2 보다 작은 특성을 삭제한다.

우선 12개의 특성과 중간 주택 가격과의 상관계수를 다시 확인해보자.

housing = strat_train_set.drop("ocean_proximity", axis=1)

#범주형 데이터 "ocean_proximity"는 일단 제거해놓는다.

housing["rooms_per_household"] = housing["total_rooms"]/housing["households"]

housing["bedrooms_per_room"] = housing["total_bedrooms"]/housing["total_rooms"]

housing["population_per_household"]=housing["population"]/housing["households"]

#특성 3개 추가

corr_matrix = housing.corr()

corr_matrix["median_house_value"]

longitude -0.047432

latitude -0.142724

housing_median_age 0.114110

total_rooms 0.135097

total_bedrooms 0.047689

population -0.026920

households 0.064506

median_income 0.687160

median_house_value 1.000000

rooms_per_household 0.146285

bedrooms_per_room -0.259984

population_per_household -0.021985

Name: median_house_value, dtype: float64

당연히 자기 자신(median_house_value)은 상관계수가 1일 것이고, 상관이 없으면 없을수록 수치는 낮을 것이다.

이제 (절대값)corr_matrix에서 0.2보다 낮은 항목을 housing 데이터프레임에서 제거해보자.

corr_matrix2 = corr_matrix["median_house_value"]

delist = []

i = 0

while i < len(housing.columns):

if abs(corr_matrix2[i]) < 0.2:

delist.append(housing.columns[i])

i += 1

housing = housing.drop(delist, axis=1)

housing

| 2.7042 | 286600.0 | 0.223852 |

| 6.4214 | 340600.0 | 0.159057 |

| 2.8621 | 196900.0 | 0.241291 |

| 1.8839 | 46300.0 | 0.200866 |

| 3.0347 | 254500.0 | 0.231341 |

| ... | ... | ... |

| 4.9312 | 240200.0 | 0.185681 |

| 2.0682 | 113000.0 | 0.245819 |

| 3.2723 | 97800.0 | 0.179609 |

| 4.0625 | 225900.0 | 0.193878 |

| 3.5750 | 500001.0 | 0.220355 |

16512 rows × 3 columns

제거된 열을 제외하고 출력된 것을 확인할 수 있다.

corr_matrix = housing.corr()

corr_matrix["median_house_value"]

median_income 0.687160

median_house_value 1.000000

bedrooms_per_room -0.259984

Name: median_house_value, dtype: float64변환 3

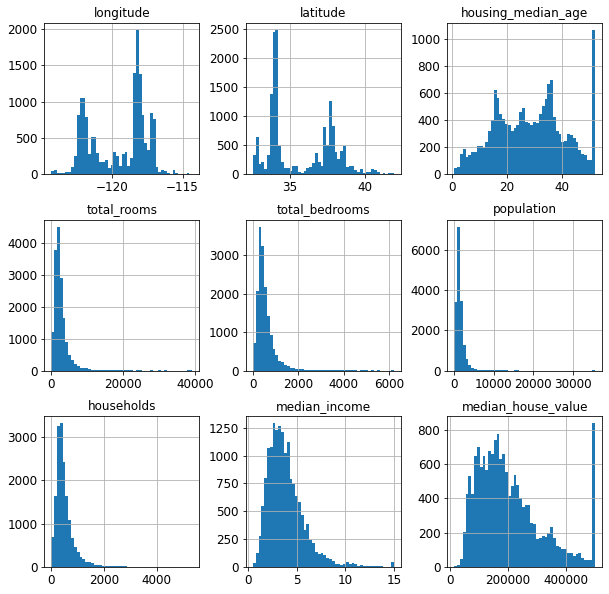

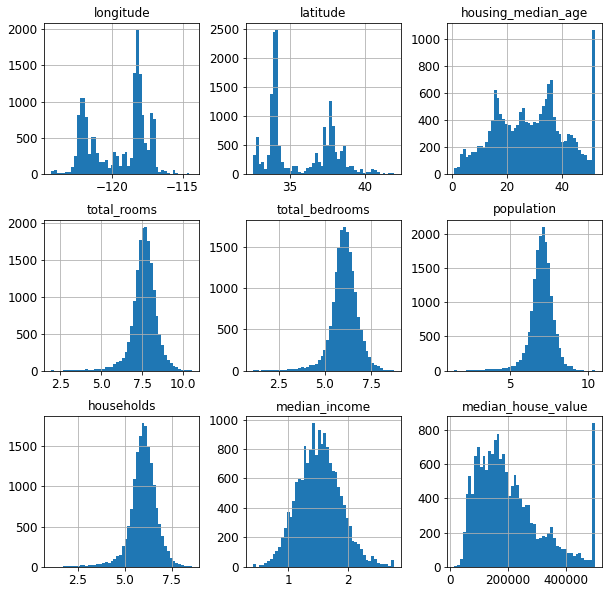

범주형 특성을 제외한 9개 특성별 히스토그램을 보면 일부 특성의 히스토그램이 좌우 비대칭이다. (전문 용어로 왜도(skewness)가 0이 아닐 때 이런 분포가 발생한다.) 대표적으로 방의 총 개수(total_rooms), 침실 총 개수(total_bedrooms), 인구(population), 가구수(households), 중간소득(median_income) 등 다섯 개의 특성이 그렇다. 앞서 언급된 5개 특성 또는 일부에 대해 로그 변환을 적용한다.

우선 다시 housing 데이터프레임을 불러온다.

housing = strat_train_set.copy()

# housing 값 초기화

9개 특성들의 히스토그램을 확인해보면

housing.hist(bins=50,figsize=(10,10))

array([[,

,

],

[,

,

],

[,

,

]],

dtype=object)

여기서 방의 총 개수(total_rooms), 침실 총 개수(total_bedrooms), 인구(population), 가구수(households), 중간소득(median_income)

이 5개의 특성이 좌우 비대칭인 것을 확인할 수 있다.

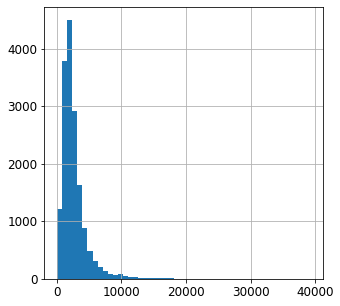

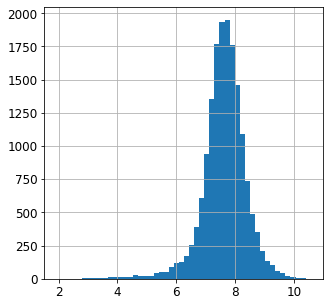

우선 자세하게 total_rooms 특성부터 살펴보자.

housing["total_rooms"].hist(bins=50,figsize=(5,5))

여기서 그냥 numpy를 활용하여 로그변환을 가져와서 시키면 된다.

housing["total_rooms"] = np.log1p(housing["total_rooms"])

밑은 로그 변환 후 히스토그램이다. 확실히 좌우비대칭이 괜찮아졌다.

housing["total_rooms"].hist(bins=50,figsize=(5,5))

다른 4개의 특성도 로그변환을 시키고 히스토그램으로 확인해보면 좌우비대칭 현상이 해결된 것을 확인할 수 있다.

housing["total_bedrooms"] = np.log1p(housing["total_bedrooms"])

housing["population"] = np.log1p(housing["population"])

housing["households"] = np.log1p(housing["households"])

housing["median_income"] = np.log1p(housing["median_income"])

housing.hist(bins=50,figsize=(10,10))

array([[,

,

],

[,

,

],

[,

,

]],

dtype=object)

실제 데이터와 변환 적용한 데이터 비교

1. 원본(수정) (RMSE = 47730.22690385927)

1.1 알고리즘을 위한 데이터 준비

housing = strat_train_set.drop("median_house_value", axis=1) # 훈련 세트를 위해 레이블 삭제

housing_labels = strat_train_set["median_house_value"].copy()

sample_incomplete_rows = housing[housing.isnull().any(axis=1)].head()

sample_incomplete_rows

| -118.30 | 34.07 | 18.0 | 3759.0 | NaN | 3296.0 | 1462.0 | 2.2708 | <1H OCEAN |

| -117.86 | 34.01 | 16.0 | 4632.0 | NaN | 3038.0 | 727.0 | 5.1762 | <1H OCEAN |

| -121.97 | 37.35 | 30.0 | 1955.0 | NaN | 999.0 | 386.0 | 4.6328 | <1H OCEAN |

| -117.30 | 34.05 | 6.0 | 2155.0 | NaN | 1039.0 | 391.0 | 1.6675 | INLAND |

| -122.79 | 38.48 | 7.0 | 6837.0 | NaN | 3468.0 | 1405.0 | 3.1662 | <1H OCEAN |

sample_incomplete_rows.drop("total_bedrooms", axis=1) # 옵션 2

| -118.30 | 34.07 | 18.0 | 3759.0 | 3296.0 | 1462.0 | 2.2708 | <1H OCEAN |

| -117.86 | 34.01 | 16.0 | 4632.0 | 3038.0 | 727.0 | 5.1762 | <1H OCEAN |

| -121.97 | 37.35 | 30.0 | 1955.0 | 999.0 | 386.0 | 4.6328 | <1H OCEAN |

| -117.30 | 34.05 | 6.0 | 2155.0 | 1039.0 | 391.0 | 1.6675 | INLAND |

| -122.79 | 38.48 | 7.0 | 6837.0 | 3468.0 | 1405.0 | 3.1662 | <1H OCEAN |

median = housing["total_bedrooms"].median()

sample_incomplete_rows["total_bedrooms"].fillna(median, inplace=True) # 옵션 3

sample_incomplete_rows

| -118.30 | 34.07 | 18.0 | 3759.0 | 433.0 | 3296.0 | 1462.0 | 2.2708 | <1H OCEAN |

| -117.86 | 34.01 | 16.0 | 4632.0 | 433.0 | 3038.0 | 727.0 | 5.1762 | <1H OCEAN |

| -121.97 | 37.35 | 30.0 | 1955.0 | 433.0 | 999.0 | 386.0 | 4.6328 | <1H OCEAN |

| -117.30 | 34.05 | 6.0 | 2155.0 | 433.0 | 1039.0 | 391.0 | 1.6675 | INLAND |

| -122.79 | 38.48 | 7.0 | 6837.0 | 433.0 | 3468.0 | 1405.0 | 3.1662 | <1H OCEAN |

from sklearn.impute import SimpleImputer

imputer = SimpleImputer(strategy="median")

중간값이 수치형 특성에서만 계산될 수 있기 때문에 텍스트 특성을 삭제합니다.

housing_num = housing.drop("ocean_proximity", axis=1)

# 다른 방법: housing_num = housing.select_dtypes(include=[np.number])

imputer.fit(housing_num)

SimpleImputer(add_indicator=False, copy=True, fill_value=None,

missing_values=nan, strategy='median', verbose=0)imputer.statistics_

array([-118.51 , 34.26 , 29. , 2119.5 , 433. , 1164. ,

408. , 3.5409])

각 특성의 중간 값이 수동으로 계산한 것과 같은지 확인

housing_num.median().values

array([-118.51 , 34.26 , 29. , 2119.5 , 433. , 1164. ,

408. , 3.5409])

훈련 세트를 변환합니다.

X = imputer.transform(housing_num)

housing_tr = pd.DataFrame(X, columns=housing_num.columns,

index=housing_num.index)

housing_tr.loc[sample_incomplete_rows.index.values]

| -118.30 | 34.07 | 18.0 | 3759.0 | 433.0 | 3296.0 | 1462.0 | 2.2708 |

| -117.86 | 34.01 | 16.0 | 4632.0 | 433.0 | 3038.0 | 727.0 | 5.1762 |

| -121.97 | 37.35 | 30.0 | 1955.0 | 433.0 | 999.0 | 386.0 | 4.6328 |

| -117.30 | 34.05 | 6.0 | 2155.0 | 433.0 | 1039.0 | 391.0 | 1.6675 |

| -122.79 | 38.48 | 7.0 | 6837.0 | 433.0 | 3468.0 | 1405.0 | 3.1662 |

imputer.strategy

'median'housing_tr.head()

| -121.89 | 37.29 | 38.0 | 1568.0 | 351.0 | 710.0 | 339.0 | 2.7042 |

| -121.93 | 37.05 | 14.0 | 679.0 | 108.0 | 306.0 | 113.0 | 6.4214 |

| -117.20 | 32.77 | 31.0 | 1952.0 | 471.0 | 936.0 | 462.0 | 2.8621 |

| -119.61 | 36.31 | 25.0 | 1847.0 | 371.0 | 1460.0 | 353.0 | 1.8839 |

| -118.59 | 34.23 | 17.0 | 6592.0 | 1525.0 | 4459.0 | 1463.0 | 3.0347 |

이제 범주형 입력 특성인 ocean_proximity을 전처리합니다:

housing_cat = housing[["ocean_proximity"]]

housing_cat.head(10)

| <1H OCEAN |

| <1H OCEAN |

| NEAR OCEAN |

| INLAND |

| <1H OCEAN |

| INLAND |

| <1H OCEAN |

| INLAND |

| <1H OCEAN |

| <1H OCEAN |

from sklearn.preprocessing import OrdinalEncoder

ordinal_encoder = OrdinalEncoder()

housing_cat_encoded = ordinal_encoder.fit_transform(housing_cat)

housing_cat_encoded[:10]

array([[0.],

[0.],

[4.],

[1.],

[0.],

[1.],

[0.],

[1.],

[0.],

[0.]])ordinal_encoder.categories_

[array(['<1H OCEAN', 'INLAND', 'ISLAND', 'NEAR BAY', 'NEAR OCEAN'],

dtype=object)]from sklearn.preprocessing import OneHotEncoder

cat_encoder = OneHotEncoder()

housing_cat_1hot = cat_encoder.fit_transform(housing_cat)

housing_cat_1hot

<16512x5 sparse matrix of type ''

with 16512 stored elements in Compressed Sparse Row format>

OneHotEncoder는 기본적으로 희소 행렬을 반환한다. 필요하면 toarray() 메서드를 사용해 밀집 배열로 변환할 수 있다.

housing_cat_1hot.toarray()

array([[1., 0., 0., 0., 0.],

[1., 0., 0., 0., 0.],

[0., 0., 0., 0., 1.],

...,

[0., 1., 0., 0., 0.],

[1., 0., 0., 0., 0.],

[0., 0., 0., 1., 0.]])

또는 OneHotEncoder를 만들 때 sparse=False로 지정할 수 있습니다:

cat_encoder = OneHotEncoder(sparse=False)

housing_cat_1hot = cat_encoder.fit_transform(housing_cat)

housing_cat_1hot

array([[1., 0., 0., 0., 0.],

[1., 0., 0., 0., 0.],

[0., 0., 0., 0., 1.],

...,

[0., 1., 0., 0., 0.],

[1., 0., 0., 0., 0.],

[0., 0., 0., 1., 0.]])cat_encoder.categories_

[array(['<1H OCEAN', 'INLAND', 'ISLAND', 'NEAR BAY', 'NEAR OCEAN'],

dtype=object)]from sklearn.preprocessing import OneHotEncoder

cat_encoder = OneHotEncoder()

housing_cat_1hot = cat_encoder.fit_transform(housing_cat)

housing_cat_1hot

<16512x5 sparse matrix of type ''

with 16512 stored elements in Compressed Sparse Row format>#housing = housing.drop("ocean_proximity", axis=1)

import scipy as sp

import numpy as np

one_hot_df = pd.DataFrame(housing_cat_1hot.toarray(), columns=cat_encoder.categories_)

one_hot_df

| 1.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 0.0 | 0.0 | 0.0 | 0.0 | 1.0 |

| 0.0 | 1.0 | 0.0 | 0.0 | 0.0 |

| 1.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| ... | ... | ... | ... | ... |

| 0.0 | 1.0 | 0.0 | 0.0 | 0.0 |

| 0.0 | 1.0 | 0.0 | 0.0 | 0.0 |

| 0.0 | 1.0 | 0.0 | 0.0 | 0.0 |

| 1.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 0.0 | 0.0 | 0.0 | 1.0 | 0.0 |

16512 rows × 5 columns

housing = housing.reset_index(drop=True)

pd.concat([housing, one_hot_df], axis = 1)

| -121.89 | 37.29 | 38.0 | 1568.0 | 351.0 | 710.0 | 339.0 | 2.7042 | <1H OCEAN | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| -121.93 | 37.05 | 14.0 | 679.0 | 108.0 | 306.0 | 113.0 | 6.4214 | <1H OCEAN | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| -117.20 | 32.77 | 31.0 | 1952.0 | 471.0 | 936.0 | 462.0 | 2.8621 | NEAR OCEAN | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 |

| -119.61 | 36.31 | 25.0 | 1847.0 | 371.0 | 1460.0 | 353.0 | 1.8839 | INLAND | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 |

| -118.59 | 34.23 | 17.0 | 6592.0 | 1525.0 | 4459.0 | 1463.0 | 3.0347 | <1H OCEAN | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| -118.13 | 34.20 | 46.0 | 1271.0 | 236.0 | 573.0 | 210.0 | 4.9312 | INLAND | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 |

| -117.56 | 33.88 | 40.0 | 1196.0 | 294.0 | 1052.0 | 258.0 | 2.0682 | INLAND | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 |

| -116.40 | 34.09 | 9.0 | 4855.0 | 872.0 | 2098.0 | 765.0 | 3.2723 | INLAND | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 |

| -118.01 | 33.82 | 31.0 | 1960.0 | 380.0 | 1356.0 | 356.0 | 4.0625 | <1H OCEAN | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| -122.45 | 37.77 | 52.0 | 3095.0 | 682.0 | 1269.0 | 639.0 | 3.5750 | NEAR BAY | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 |

16512 rows × 14 columns

추가 특성을 위해 사용자 정의 변환기를 만들어보죠:

from sklearn.base import BaseEstimator, TransformerMixin

# 열 인덱스

rooms_ix, bedrooms_ix, population_ix, households_ix = 3, 4, 5, 6

class CombinedAttributesAdder(BaseEstimator, TransformerMixin):

def __init__(self, add_bedrooms_per_room=True): # *args 또는 **kargs 없음

self.add_bedrooms_per_room = add_bedrooms_per_room

def fit(self, X, y=None):

return self # 아무것도 하지 않습니다

def transform(self, X):

rooms_per_household = X[:, rooms_ix] / X[:, households_ix]

population_per_household = X[:, population_ix] / X[:, households_ix]

if self.add_bedrooms_per_room:

bedrooms_per_room = X[:, bedrooms_ix] / X[:, rooms_ix]

return np.c_[X, rooms_per_household, population_per_household,

bedrooms_per_room]

else:

return np.c_[X, rooms_per_household, population_per_household]

attr_adder = CombinedAttributesAdder(add_bedrooms_per_room=False)

housing_extra_attribs = attr_adder.transform(housing.to_numpy())

책에서는 간단하게 인덱스 (3, 4, 5, 6)을 하드코딩했지만 다음처럼 동적으로 처리하는 것이 더 좋습니다.

col_names = "total_rooms", "total_bedrooms", "population", "households"

rooms_ix, bedrooms_ix, population_ix, households_ix = [

housing.columns.get_loc(c) for c in col_names] # 열 인덱스 구하기

또한 housing_extra_attribs는 넘파이 배열이기 때문에 열 이름이 없습니다(안타깝지만 사이킷런을 사용할 때 생기는 문제입니다).

DataFrame으로 복원하려면 다음과 같이 할 수 있습니다:

housing_extra_attribs = pd.DataFrame(

housing_extra_attribs,

columns=list(housing.columns)+["rooms_per_household", "population_per_household"],

index=housing.index)

housing_extra_attribs.head()

| -121.89 | 37.29 | 38 | 1568 | 351 | 710 | 339 | 2.7042 | <1H OCEAN | 4.62537 | 2.0944 |

| -121.93 | 37.05 | 14 | 679 | 108 | 306 | 113 | 6.4214 | <1H OCEAN | 6.00885 | 2.70796 |

| -117.2 | 32.77 | 31 | 1952 | 471 | 936 | 462 | 2.8621 | NEAR OCEAN | 4.22511 | 2.02597 |

| -119.61 | 36.31 | 25 | 1847 | 371 | 1460 | 353 | 1.8839 | INLAND | 5.23229 | 4.13598 |

| -118.59 | 34.23 | 17 | 6592 | 1525 | 4459 | 1463 | 3.0347 | <1H OCEAN | 4.50581 | 3.04785 |

수치형 특성을 전처리하기 위해 파이프라인을 만듭니다:

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import StandardScaler

num_pipeline = Pipeline([

('imputer', SimpleImputer(strategy="median")),

('attribs_adder', CombinedAttributesAdder()),

('std_scaler', StandardScaler()),

])

housing_num_tr = num_pipeline.fit_transform(housing_num)

housing_num_tr

array([[-1.15604281, 0.77194962, 0.74333089, ..., -0.31205452,

-0.08649871, 0.15531753],

[-1.17602483, 0.6596948 , -1.1653172 , ..., 0.21768338,

-0.03353391, -0.83628902],

[ 1.18684903, -1.34218285, 0.18664186, ..., -0.46531516,

-0.09240499, 0.4222004 ],

...,

[ 1.58648943, -0.72478134, -1.56295222, ..., 0.3469342 ,

-0.03055414, -0.52177644],

[ 0.78221312, -0.85106801, 0.18664186, ..., 0.02499488,

0.06150916, -0.30340741],

[-1.43579109, 0.99645926, 1.85670895, ..., -0.22852947,

-0.09586294, 0.10180567]])from sklearn.compose import ColumnTransformer

num_attribs = list(housing_num)

cat_attribs = ["ocean_proximity"]

full_pipeline = ColumnTransformer([

("num", num_pipeline, num_attribs),

("cat", OneHotEncoder(), cat_attribs),

])

housing_prepared = full_pipeline.fit_transform(housing)

housing_prepared

array([[-1.15604281, 0.77194962, 0.74333089, ..., 0. ,

0. , 0. ],

[-1.17602483, 0.6596948 , -1.1653172 , ..., 0. ,

0. , 0. ],

[ 1.18684903, -1.34218285, 0.18664186, ..., 0. ,

0. , 1. ],

...,

[ 1.58648943, -0.72478134, -1.56295222, ..., 0. ,

0. , 0. ],

[ 0.78221312, -0.85106801, 0.18664186, ..., 0. ,

0. , 0. ],

[-1.43579109, 0.99645926, 1.85670895, ..., 0. ,

1. , 0. ]])housing_prepared.shape

(16512, 16)

다음은 (판다스 DataFrame 열의 일부를 선택하기 위해) DataFrameSelector 변환기와 FeatureUnion를 사용한 예전 방식입니다.

from sklearn.base import BaseEstimator, TransformerMixin

# 수치형 열과 범주형 열을 선택하기 위한 클래스

class OldDataFrameSelector(BaseEstimator, TransformerMixin):

def __init__(self, attribute_names):

self.attribute_names = attribute_names

def fit(self, X, y=None):

return self

def transform(self, X):

return X[self.attribute_names].values

하나의 큰 파이프라인에 이들을 모두 결합하여 수치형과 범주형 특성을 전처리합니다.

num_attribs = list(housing_num)

cat_attribs = ["ocean_proximity"]

old_num_pipeline = Pipeline([

('selector', OldDataFrameSelector(num_attribs)),

('imputer', SimpleImputer(strategy="median")),

('attribs_adder', CombinedAttributesAdder()),

('std_scaler', StandardScaler()),

])

old_cat_pipeline = Pipeline([

('selector', OldDataFrameSelector(cat_attribs)),

('cat_encoder', OneHotEncoder(sparse=False)),

])

from sklearn.pipeline import FeatureUnion

old_full_pipeline = FeatureUnion(transformer_list=[

("num_pipeline", old_num_pipeline),

("cat_pipeline", old_cat_pipeline),

])

old_housing_prepared = old_full_pipeline.fit_transform(housing)

old_housing_prepared

array([[-1.15604281, 0.77194962, 0.74333089, ..., 0. ,

0. , 0. ],

[-1.17602483, 0.6596948 , -1.1653172 , ..., 0. ,

0. , 0. ],

[ 1.18684903, -1.34218285, 0.18664186, ..., 0. ,

0. , 1. ],

...,

[ 1.58648943, -0.72478134, -1.56295222, ..., 0. ,

0. , 0. ],

[ 0.78221312, -0.85106801, 0.18664186, ..., 0. ,

0. , 0. ],

[-1.43579109, 0.99645926, 1.85670895, ..., 0. ,

1. , 0. ]])

ColumnTransformer의 결과와 동일합니다.

np.allclose(housing_prepared, old_housing_prepared)

True1.2 모델 선택과 훈련

from sklearn.linear_model import LinearRegression

lin_reg = LinearRegression()

lin_reg.fit(housing_prepared, housing_labels)

LinearRegression(copy_X=True, fit_intercept=True, n_jobs=None, normalize=False)# 훈련 샘플 몇 개를 사용해 전체 파이프라인을 적용해 보겠습니다

some_data = housing.iloc[:5]

some_labels = housing_labels.iloc[:5]

some_data_prepared = full_pipeline.transform(some_data)

print("예측:", lin_reg.predict(some_data_prepared))

예측: [210644.60459286 317768.80697211 210956.43331178 59218.98886849

189747.55849879]

실제 값과 비교합니다.

print("레이블:", list(some_labels))

레이블: [286600.0, 340600.0, 196900.0, 46300.0, 254500.0]

some_data_prepared

array([[-1.15604281, 0.77194962, 0.74333089, -0.49323393, -0.44543821,

-0.63621141, -0.42069842, -0.61493744, -0.31205452, -0.08649871,

0.15531753, 1. , 0. , 0. , 0. ,

0. ],

[-1.17602483, 0.6596948 , -1.1653172 , -0.90896655, -1.0369278 ,

-0.99833135, -1.02222705, 1.33645936, 0.21768338, -0.03353391,

-0.83628902, 1. , 0. , 0. , 0. ,

0. ],

[ 1.18684903, -1.34218285, 0.18664186, -0.31365989, -0.15334458,

-0.43363936, -0.0933178 , -0.5320456 , -0.46531516, -0.09240499,

0.4222004 , 0. , 0. , 0. , 0. ,

1. ],

[-0.01706767, 0.31357576, -0.29052016, -0.36276217, -0.39675594,

0.03604096, -0.38343559, -1.04556555, -0.07966124, 0.08973561,

-0.19645314, 0. , 1. , 0. , 0. ,

0. ],

[ 0.49247384, -0.65929936, -0.92673619, 1.85619316, 2.41221109,

2.72415407, 2.57097492, -0.44143679, -0.35783383, -0.00419445,

0.2699277 , 1. , 0. , 0. , 0. ,

0. ]])from sklearn.metrics import mean_squared_error

housing_predictions = lin_reg.predict(housing_prepared)

lin_mse = mean_squared_error(housing_labels, housing_predictions)

lin_rmse = np.sqrt(lin_mse)

lin_rmse

68628.19819848923

사이킷런 0.22 버전부터는 squared=False 매개변수로 mean_squared_error() 함수를 호출하면 RMSE를 바로 얻을 수 있습니다.

from sklearn.metrics import mean_absolute_error

lin_mae = mean_absolute_error(housing_labels, housing_predictions)

lin_mae

49439.89599001897from sklearn.tree import DecisionTreeRegressor

tree_reg = DecisionTreeRegressor(random_state=42)

tree_reg.fit(housing_prepared, housing_labels)

DecisionTreeRegressor(ccp_alpha=0.0, criterion='mse', max_depth=None,

max_features=None, max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, presort='deprecated',

random_state=42, splitter='best')housing_predictions = tree_reg.predict(housing_prepared)

tree_mse = mean_squared_error(housing_labels, housing_predictions)

tree_rmse = np.sqrt(tree_mse)

tree_rmse

0.0from sklearn.model_selection import cross_val_score

scores = cross_val_score(tree_reg, housing_prepared, housing_labels,

scoring="neg_mean_squared_error", cv=10)

tree_rmse_scores = np.sqrt(-scores)

def display_scores(scores):

print("점수:", scores)

print("평균:", scores.mean())

print("표준 편차:", scores.std())

display_scores(tree_rmse_scores)

점수: [70194.33680785 66855.16363941 72432.58244769 70758.73896782

71115.88230639 75585.14172901 70262.86139133 70273.6325285

75366.87952553 71231.65726027]

평균: 71407.68766037929

표준 편차: 2439.4345041191004

lin_scores = cross_val_score(lin_reg, housing_prepared, housing_labels,

scoring="neg_mean_squared_error", cv=10)

lin_rmse_scores = np.sqrt(-lin_scores)

display_scores(lin_rmse_scores)

점수: [66782.73843989 66960.118071 70347.95244419 74739.57052552

68031.13388938 71193.84183426 64969.63056405 68281.61137997

71552.91566558 67665.10082067]

평균: 69052.46136345083

표준 편차: 2731.674001798344

사이킷런 0.22 버전에서 n_estimators의 기본값이 100으로 바뀌기 때문에 향후를 위해 n_estimators=100로 지정

from sklearn.ensemble import RandomForestRegressor

forest_reg = RandomForestRegressor(n_estimators=100, random_state=42)

forest_reg.fit(housing_prepared, housing_labels)

RandomForestRegressor(bootstrap=True, ccp_alpha=0.0, criterion='mse',

max_depth=None, max_features='auto', max_leaf_nodes=None,

max_samples=None, min_impurity_decrease=0.0,

min_impurity_split=None, min_samples_leaf=1,

min_samples_split=2, min_weight_fraction_leaf=0.0,

n_estimators=100, n_jobs=None, oob_score=False,

random_state=42, verbose=0, warm_start=False)housing_predictions = forest_reg.predict(housing_prepared)

forest_mse = mean_squared_error(housing_labels, housing_predictions)

forest_rmse = np.sqrt(forest_mse)

forest_rmse

18603.515021376355from sklearn.model_selection import cross_val_score

forest_scores = cross_val_score(forest_reg, housing_prepared, housing_labels,

scoring="neg_mean_squared_error", cv=10)

forest_rmse_scores = np.sqrt(-forest_scores)

display_scores(forest_rmse_scores)

점수: [49519.80364233 47461.9115823 50029.02762854 52325.28068953

49308.39426421 53446.37892622 48634.8036574 47585.73832311

53490.10699751 50021.5852922 ]

평균: 50182.303100336096

표준 편차: 2097.0810550985693

scores = cross_val_score(lin_reg, housing_prepared, housing_labels, scoring="neg_mean_squared_error", cv=10)

pd.Series(np.sqrt(-scores)).describe()

count 10.000000

mean 69052.461363

std 2879.437224

min 64969.630564

25% 67136.363758

50% 68156.372635

75% 70982.369487

max 74739.570526

dtype: float64from sklearn.svm import SVR

svm_reg = SVR(kernel="linear")

svm_reg.fit(housing_prepared, housing_labels)

housing_predictions = svm_reg.predict(housing_prepared)

svm_mse = mean_squared_error(housing_labels, housing_predictions)

svm_rmse = np.sqrt(svm_mse)

svm_rmse

111094.63085399821.3 모델 세부 튜팅

from sklearn.model_selection import GridSearchCV

param_grid = [

# 12(=3×4)개의 하이퍼파라미터 조합을 시도합니다.

{'n_estimators': [3, 10, 30], 'max_features': [2, 4, 6, 8]},

# bootstrap은 False로 하고 6(=2×3)개의 조합을 시도합니다.

{'bootstrap': [False], 'n_estimators': [3, 10], 'max_features': [2, 3, 4]},

]

forest_reg = RandomForestRegressor(random_state=42)

# 다섯 개의 폴드로 훈련하면 총 (12+6)*5=90번의 훈련이 일어납니다.

grid_search = GridSearchCV(forest_reg, param_grid, cv=5,

scoring='neg_mean_squared_error',

return_train_score=True)

grid_search.fit(housing_prepared, housing_labels)

GridSearchCV(cv=5, error_score=nan,

estimator=RandomForestRegressor(bootstrap=True, ccp_alpha=0.0,

criterion='mse', max_depth=None,

max_features='auto',

max_leaf_nodes=None,

max_samples=None,

min_impurity_decrease=0.0,

min_impurity_split=None,

min_samples_leaf=1,

min_samples_split=2,

min_weight_fraction_leaf=0.0,

n_estimators=100, n_jobs=None,

oob_score=False, random_state=42,

verbose=0, warm_start=False),

iid='deprecated', n_jobs=None,

param_grid=[{'max_features': [2, 4, 6, 8],

'n_estimators': [3, 10, 30]},

{'bootstrap': [False], 'max_features': [2, 3, 4],

'n_estimators': [3, 10]}],

pre_dispatch='2*n_jobs', refit=True, return_train_score=True,

scoring='neg_mean_squared_error', verbose=0)

최상의 파라미터 조합은 다음과 같습니다:

grid_search.best_params_

{'max_features': 8, 'n_estimators': 30}grid_search.best_estimator_

RandomForestRegressor(bootstrap=True, ccp_alpha=0.0, criterion='mse',

max_depth=None, max_features=8, max_leaf_nodes=None,

max_samples=None, min_impurity_decrease=0.0,

min_impurity_split=None, min_samples_leaf=1,

min_samples_split=2, min_weight_fraction_leaf=0.0,

n_estimators=30, n_jobs=None, oob_score=False,

random_state=42, verbose=0, warm_start=False)

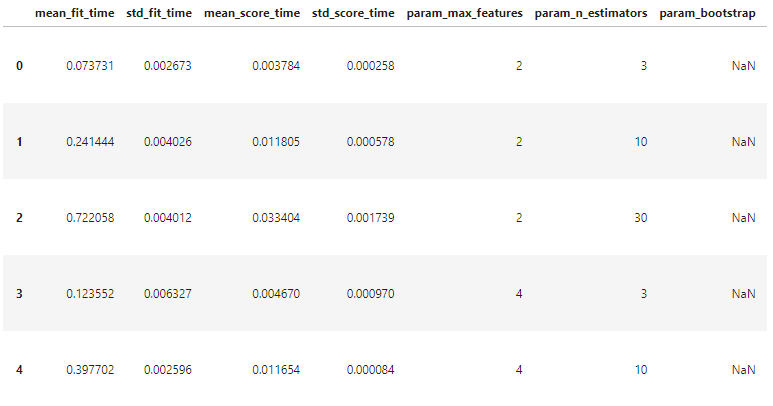

그리드서치에서 테스트한 하이퍼파라미터 조합의 점수를 확인합니다:

cvres = grid_search.cv_results_

for mean_score, params in zip(cvres["mean_test_score"], cvres["params"]):

print(np.sqrt(-mean_score), params)

63669.11631261028 {'max_features': 2, 'n_estimators': 3}

55627.099719926795 {'max_features': 2, 'n_estimators': 10}

53384.57275149205 {'max_features': 2, 'n_estimators': 30}

60965.950449450494 {'max_features': 4, 'n_estimators': 3}

52741.04704299915 {'max_features': 4, 'n_estimators': 10}

50377.40461678399 {'max_features': 4, 'n_estimators': 30}

58663.93866579625 {'max_features': 6, 'n_estimators': 3}

52006.19873526564 {'max_features': 6, 'n_estimators': 10}

50146.51167415009 {'max_features': 6, 'n_estimators': 30}

57869.25276169646 {'max_features': 8, 'n_estimators': 3}

51711.127883959234 {'max_features': 8, 'n_estimators': 10}

49682.273345071546 {'max_features': 8, 'n_estimators': 30}

62895.06951262424 {'bootstrap': False, 'max_features': 2, 'n_estimators': 3}

54658.176157539405 {'bootstrap': False, 'max_features': 2, 'n_estimators': 10}

59470.40652318466 {'bootstrap': False, 'max_features': 3, 'n_estimators': 3}

52724.9822587892 {'bootstrap': False, 'max_features': 3, 'n_estimators': 10}

57490.5691951261 {'bootstrap': False, 'max_features': 4, 'n_estimators': 3}

51009.495668875716 {'bootstrap': False, 'max_features': 4, 'n_estimators': 10}

pd.DataFrame(grid_search.cv_results_)

데이터 전처리와 그리드 탐색을 연결한 파이프라인을 이용하면 전처리 단계에서 설정해야 하는 값들을 일종의 하이퍼파라미터로 다룰 수 있다. 예를 들어,

- CombinedAttributesAdder 클래스의 객체를 생성할 때 지정하는 add_bedrooms_per_room 옵션 변수 값 지정하기

- 이상치 처리하기

- 누락된 값 처리하기

- 특성 선택하기

등에 대해 어떻게 설정하는 것이 좋은지도 함께 찾아준다. 조금은 고급 기술이지만, 지금까지 배운 내용을 이해한다면 어렵지 않게 적용할 수 있는 기술이다. 파이프라인과 그리드 탐색을 연동한 예제들을 아래 사이트에서 살펴볼 수 있다.

from sklearn.model_selection import RandomizedSearchCV

from scipy.stats import randint

param_distribs = {

'n_estimators': randint(low=1, high=200),

'max_features': randint(low=1, high=8),

}

forest_reg = RandomForestRegressor(random_state=42)

rnd_search = RandomizedSearchCV(forest_reg, param_distributions=param_distribs,

n_iter=10, cv=5, scoring='neg_mean_squared_error', random_state=42)

rnd_search.fit(housing_prepared, housing_labels)

RandomizedSearchCV(cv=5, error_score=nan,

estimator=RandomForestRegressor(bootstrap=True,

ccp_alpha=0.0,

criterion='mse',

max_depth=None,

max_features='auto',

max_leaf_nodes=None,

max_samples=None,

min_impurity_decrease=0.0,

min_impurity_split=None,

min_samples_leaf=1,

min_samples_split=2,

min_weight_fraction_leaf=0.0,

n_estimators=100,

n_jobs=None, oob_score=Fals...

warm_start=False),

iid='deprecated', n_iter=10, n_jobs=None,

param_distributions={'max_features': ,

'n_estimators': },

pre_dispatch='2*n_jobs', random_state=42, refit=True,

return_train_score=False, scoring='neg_mean_squared_error',

verbose=0)cvres = rnd_search.cv_results_

for mean_score, params in zip(cvres["mean_test_score"], cvres["params"]):

print(np.sqrt(-mean_score), params)

49150.70756927707 {'max_features': 7, 'n_estimators': 180}

51389.889203389284 {'max_features': 5, 'n_estimators': 15}

50796.155224308866 {'max_features': 3, 'n_estimators': 72}

50835.13360315349 {'max_features': 5, 'n_estimators': 21}

49280.9449827171 {'max_features': 7, 'n_estimators': 122}

50774.90662363929 {'max_features': 3, 'n_estimators': 75}

50682.78888164288 {'max_features': 3, 'n_estimators': 88}

49608.99608105296 {'max_features': 5, 'n_estimators': 100}

50473.61930350219 {'max_features': 3, 'n_estimators': 150}

64429.84143294435 {'max_features': 5, 'n_estimators': 2}

feature_importances = grid_search.best_estimator_.feature_importances_

feature_importances

array([7.33442355e-02, 6.29090705e-02, 4.11437985e-02, 1.46726854e-02,

1.41064835e-02, 1.48742809e-02, 1.42575993e-02, 3.66158981e-01,

5.64191792e-02, 1.08792957e-01, 5.33510773e-02, 1.03114883e-02,

1.64780994e-01, 6.02803867e-05, 1.96041560e-03, 2.85647464e-03])extra_attribs = ["rooms_per_hhold", "pop_per_hhold", "bedrooms_per_room"]

#cat_encoder = cat_pipeline.named_steps["cat_encoder"] # 예전 방식

cat_encoder = full_pipeline.named_transformers_["cat"]

cat_one_hot_attribs = list(cat_encoder.categories_[0])

attributes = num_attribs + extra_attribs + cat_one_hot_attribs

sorted(zip(feature_importances, attributes), reverse=True)

[(0.36615898061813423, 'median_income'),

(0.16478099356159054, 'INLAND'),

(0.10879295677551575, 'pop_per_hhold'),

(0.07334423551601243, 'longitude'),

(0.06290907048262032, 'latitude'),

(0.056419179181954014, 'rooms_per_hhold'),

(0.053351077347675815, 'bedrooms_per_room'),

(0.04114379847872964, 'housing_median_age'),

(0.014874280890402769, 'population'),

(0.014672685420543239, 'total_rooms'),

(0.014257599323407808, 'households'),

(0.014106483453584104, 'total_bedrooms'),

(0.010311488326303788, '<1H OCEAN'),

(0.0028564746373201584, 'NEAR OCEAN'),

(0.0019604155994780706, 'NEAR BAY'),

(6.0280386727366e-05, 'ISLAND')]결과를 확인해보면 rmse = 47730.22690385927 값이 나오는 것을 확인할 수 있다.

final_model = grid_search.best_estimator_

X_test = strat_test_set.drop("median_house_value", axis=1)

y_test = strat_test_set["median_house_value"].copy()

X_test_prepared = full_pipeline.transform(X_test)

final_predictions = final_model.predict(X_test_prepared)

final_mse = mean_squared_error(y_test, final_predictions)

final_rmse = np.sqrt(final_mse)

final_rmse

47730.22690385927

테스트 RMSE에 대한 95% 신뢰 구간을 계산할 수 있습니다.

from scipy import stats

confidence = 0.95

squared_errors = (final_predictions - y_test) ** 2

np.sqrt(stats.t.interval(confidence, len(squared_errors) - 1,

loc=squared_errors.mean(),

scale=stats.sem(squared_errors)))

array([45685.10470776, 49691.25001878])

다음과 같이 수동으로 계산할 수도 있습니다.

m = len(squared_errors)

mean = squared_errors.mean()

tscore = stats.t.ppf((1 + confidence) / 2, df=m - 1)

tmargin = tscore * squared_errors.std(ddof=1) / np.sqrt(m)

np.sqrt(mean - tmargin), np.sqrt(mean + tmargin)

(45685.10470776, 49691.25001877858)

또는 t-점수 대신 z-점수를 사용할 수도 있습니다:

zscore = stats.norm.ppf((1 + confidence) / 2)

zmargin = zscore * squared_errors.std(ddof=1) / np.sqrt(m)

np.sqrt(mean - zmargin), np.sqrt(mean + zmargin)

(45685.717918136455, 49690.68623889413)full_pipeline_with_predictor = Pipeline([

("preparation", full_pipeline),

("linear", LinearRegression())

])

full_pipeline_with_predictor.fit(housing, housing_labels)

full_pipeline_with_predictor.predict(some_data)

array([210644.60459286, 317768.80697211, 210956.43331178, 59218.98886849,

189747.55849879])my_model = full_pipeline_with_predictor

import joblib

joblib.dump(my_model, "my_model.pkl") # DIFF

#...

my_model_loaded = joblib.load("my_model.pkl") # DIFF

원본 데이터 세트로 시스템 평가를 진행하면 rmse 값은 47730.22690385927이 나온다.

추가 특성을 위해 사용자 정의 변환기를 만들어보기.

from sklearn.base import BaseEstimator, TransformerMixin

# 열 인덱스

rooms_ix, bedrooms_ix, population_ix, households_ix = 3, 4, 5, 6

class CombinedAttributesAdder(BaseEstimator, TransformerMixin):

def __init__(self, add_bedrooms_per_room=True): # *args 또는 **kargs 없음

self.add_bedrooms_per_room = add_bedrooms_per_room

def fit(self, X, y=None):

return self # 아무것도 하지 않습니다

def transform(self, X):

rooms_per_household = X[:, rooms_ix] / X[:, households_ix]

population_per_household = X[:, population_ix] / X[:, households_ix]

if self.add_bedrooms_per_room:

bedrooms_per_room = X[:, bedrooms_ix] / X[:, rooms_ix]

return np.c_[X, rooms_per_household, population_per_household,

bedrooms_per_room]

else:

return np.c_[X, rooms_per_household, population_per_household]

attr_adder = CombinedAttributesAdder(add_bedrooms_per_room=False)

housing_extra_attribs = attr_adder.transform(housing.to_numpy())

In [128]:

col_names = "total_rooms", "total_bedrooms", "population", "households"

rooms_ix, bedrooms_ix, population_ix, households_ix = [

housing.columns.get_loc(c) for c in col_names] # 열 인덱스 구하기

In [129]:

housing_extra_attribs = pd.DataFrame(

housing_extra_attribs,

columns=list(housing.columns)+["rooms_per_household", "population_per_household"],

index=housing.index)

housing_extra_attribs.head()

| -121.89 | 37.29 | 38 | 1568 | 351 | 710 | 339 | 2.7042 | <1H OCEAN | 4.62537 | 2.0944 |

| -121.93 | 37.05 | 14 | 679 | 108 | 306 | 113 | 6.4214 | <1H OCEAN | 6.00885 | 2.70796 |

| -117.2 | 32.77 | 31 | 1952 | 471 | 936 | 462 | 2.8621 | NEAR OCEAN | 4.22511 | 2.02597 |

| -119.61 | 36.31 | 25 | 1847 | 371 | 1460 | 353 | 1.8839 | INLAND | 5.23229 | 4.13598 |

| -118.59 | 34.23 | 17 | 6592 | 1525 | 4459 | 1463 | 3.0347 | <1H OCEAN | 4.50581 | 3.04785 |

from sklearn.model_selection import GridSearchCV

param_grid = [

# 12(=3×4)개의 하이퍼파라미터 조합을 시도합니다.

{'n_estimators': [3, 10, 30], 'max_features': [2, 4, 6, 8]},

# bootstrap은 False로 하고 6(=2×3)개의 조합을 시도합니다.

{'bootstrap': [False], 'n_estimators': [3, 10], 'max_features': [2, 3, 4]},

]

forest_reg = RandomForestRegressor(random_state=42)

# 다섯 개의 폴드로 훈련하면 총 (12+6)*5=90번의 훈련이 일어납니다.

grid_search = GridSearchCV(forest_reg, param_grid, cv=5,

scoring='neg_mean_squared_error',

return_train_score=True)

grid_search.fit(housing_prepared, housing_labels)

GridSearchCV(cv=5, error_score=nan,

estimator=RandomForestRegressor(bootstrap=True, ccp_alpha=0.0,

criterion='mse', max_depth=None,

max_features='auto',

max_leaf_nodes=None,

max_samples=None,

min_impurity_decrease=0.0,

min_impurity_split=None,

min_samples_leaf=1,

min_samples_split=2,

min_weight_fraction_leaf=0.0,

n_estimators=100, n_jobs=None,

oob_score=False, random_state=42,

verbose=0, warm_start=False),

iid='deprecated', n_jobs=None,

param_grid=[{'max_features': [2, 4, 6, 8],

'n_estimators': [3, 10, 30]},

{'bootstrap': [False], 'max_features': [2, 3, 4],

'n_estimators': [3, 10]}],

pre_dispatch='2*n_jobs', refit=True, return_train_score=True,

scoring='neg_mean_squared_error', verbose=0)

최상의 파라미터 조합은 다음과 같습니다:

grid_search.best_params_

{'max_features': 8, 'n_estimators': 30}grid_search.best_estimator_

RandomForestRegressor(bootstrap=True, ccp_alpha=0.0, criterion='mse',

max_depth=None, max_features=8, max_leaf_nodes=None,

max_samples=None, min_impurity_decrease=0.0,

min_impurity_split=None, min_samples_leaf=1,

min_samples_split=2, min_weight_fraction_leaf=0.0,

n_estimators=30, n_jobs=None, oob_score=False,

random_state=42, verbose=0, warm_start=False)

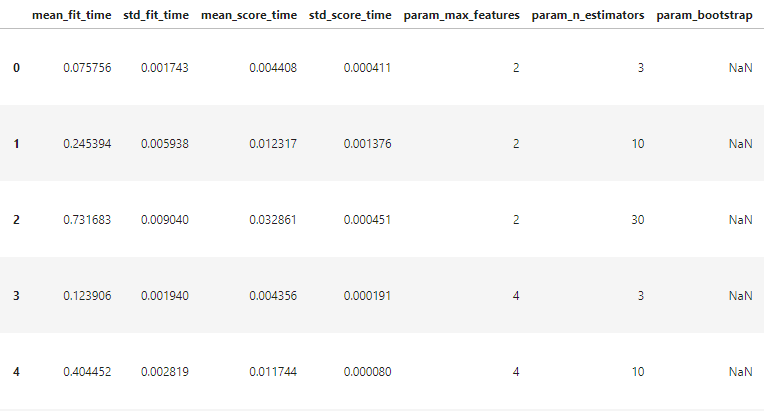

그리드서치에서 테스트한 하이퍼파라미터 조합의 점수를 확인합니다:

cvres = grid_search.cv_results_

for mean_score, params in zip(cvres["mean_test_score"], cvres["params"]):

print(np.sqrt(-mean_score), params)

63669.11631261028 {'max_features': 2, 'n_estimators': 3}

55627.099719926795 {'max_features': 2, 'n_estimators': 10}

53384.57275149205 {'max_features': 2, 'n_estimators': 30}

60965.950449450494 {'max_features': 4, 'n_estimators': 3}

52741.04704299915 {'max_features': 4, 'n_estimators': 10}

50377.40461678399 {'max_features': 4, 'n_estimators': 30}

58663.93866579625 {'max_features': 6, 'n_estimators': 3}

52006.19873526564 {'max_features': 6, 'n_estimators': 10}

50146.51167415009 {'max_features': 6, 'n_estimators': 30}

57869.25276169646 {'max_features': 8, 'n_estimators': 3}

51711.127883959234 {'max_features': 8, 'n_estimators': 10}

49682.273345071546 {'max_features': 8, 'n_estimators': 30}

62895.06951262424 {'bootstrap': False, 'max_features': 2, 'n_estimators': 3}

54658.176157539405 {'bootstrap': False, 'max_features': 2, 'n_estimators': 10}

59470.40652318466 {'bootstrap': False, 'max_features': 3, 'n_estimators': 3}

52724.9822587892 {'bootstrap': False, 'max_features': 3, 'n_estimators': 10}

57490.5691951261 {'bootstrap': False, 'max_features': 4, 'n_estimators': 3}

51009.495668875716 {'bootstrap': False, 'max_features': 4, 'n_estimators': 10}

pd.DataFrame(grid_search.cv_results_)

너무 길어져서 다음 장에 계속